Many people believe that there is no need for improvement because wind turbines have been working for decades. Yet wind energy has the potential to be one of the world’s cheapest energy sources. A recent article in the journal Science outlined the major challenges that must be addressed to drive innovation in wind energy. Essentially, three directions were identified:

- The better use of wind currents

- Structural and system dynamics of wind turbines

- Grid reliability of wind power

To make better use of wind currents, we must understand the dynamics of air masses and their interactions with land and turbines. Our knowledge of wind currents in complex terrain and under different atmospheric conditions is very limited. We have to model these conditions more precisely so that the operation of large wind turbines becomes more productive and cheaper.

To capture more energy, wind turbines have grown in size. This has side effects: for example, when large wind turbines share an area with other wind turbines, they increasingly change the wind flow itself.

As the height of wind turbines increases, we need to understand the dynamics of the wind at these heights. So far, simplified physical models have allowed wind turbines to be installed and their performance to be predicted across a variety of terrain types. The next challenge is to model these different conditions well enough that wind turbines can be optimized to be inexpensive and controllable, and can be installed in the right place.
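One example of such a simplified physical model is the power-law wind profile, which extrapolates a wind speed measured near the ground to hub height. The sketch below is only an illustration: the function name, the measurement values and the shear exponent of 0.14 (a common rule of thumb for open, flat terrain under neutral conditions) are assumptions and do not come from the Science article. Complex terrain and changing atmospheric stability are exactly the cases where a fixed exponent like this stops being reliable.

```python
def wind_speed_at_height(v_ref, z_ref, z, alpha=0.14):
    """Extrapolate wind speed from height z_ref to height z (power law).

    v_ref : wind speed measured at the reference height, in m/s
    z_ref : reference (measurement) height above ground, in m
    z     : target height above ground, in m
    alpha : shear exponent; 0.14 is an assumed rule-of-thumb value
    """
    if z_ref <= 0 or z <= 0:
        raise ValueError("heights must be positive")
    return v_ref * (z / z_ref) ** alpha


# Hypothetical example: 6 m/s measured at 10 m, extrapolated to a 120 m hub.
v_hub = wind_speed_at_height(6.0, 10.0, 120.0)
print(f"Estimated wind speed at hub height: {v_hub:.1f} m/s")
```

Because the power available in the wind grows with the cube of the wind speed, even a small error in the assumed exponent leads to a much larger error in the predicted energy yield.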

The second essential direction is a better understanding of the structure and system dynamics of wind turbines. Today, wind turbines are the largest flexible, rotating machines in the world. Blade lengths routinely exceed 80 meters, and towers rise well over 100 meters. To illustrate this, three Airbus A380s can fit in the area swept by the rotor of one wind turbine. These systems are getting bigger and heavier and must work under increasing structural loads, which requires new materials and manufacturing processes. This is necessary because scalability, transport, structural integrity and recycling of the materials used are reaching their limits.
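The size comparison with the Airbus A380 can be checked with a little arithmetic. The sketch below assumes that the comparison refers to the circular area swept by the rotor, that the rotor radius equals the 80 meter blade length (the hub is ignored), and that each aircraft occupies a rectangle of wingspan times length. The A380 dimensions (79.75 m wingspan, 72.72 m length) are published figures and are not taken from the article.

```python
import math

BLADE_LENGTH_M = 80.0    # from the text; used as rotor radius (hub ignored)
A380_WINGSPAN_M = 79.75  # assumption: published A380-800 wingspan
A380_LENGTH_M = 72.72    # assumption: published A380-800 overall length


def swept_area(radius_m):
    """Area of the circle swept by the rotor, in square meters."""
    return math.pi * radius_m ** 2


rotor_area = swept_area(BLADE_LENGTH_M)
# Bounding rectangle of one aircraft: a generous estimate of its footprint.
aircraft_area = A380_WINGSPAN_M * A380_LENGTH_M

print(f"Rotor-swept area:      {rotor_area:,.0f} m^2")
print(f"A380 bounding box:     {aircraft_area:,.0f} m^2")
print(f"Aircraft per rotor:    {rotor_area / aircraft_area:.1f}")
```

Under these assumptions the swept area exceeds the footprint of three aircraft, which is consistent with the comparison in the text. This compares areas only; it does not show that three aircraft could be arranged inside the circle.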

In addition, the interface between turbine and atmospheric dynamics raises several important research questions. Many of the simplifying assumptions on which previous wind turbines were based no longer apply. The challenge is not only to understand the atmosphere, but also to find out which factors are decisive for the efficiency of power generation as well as for structural safety.

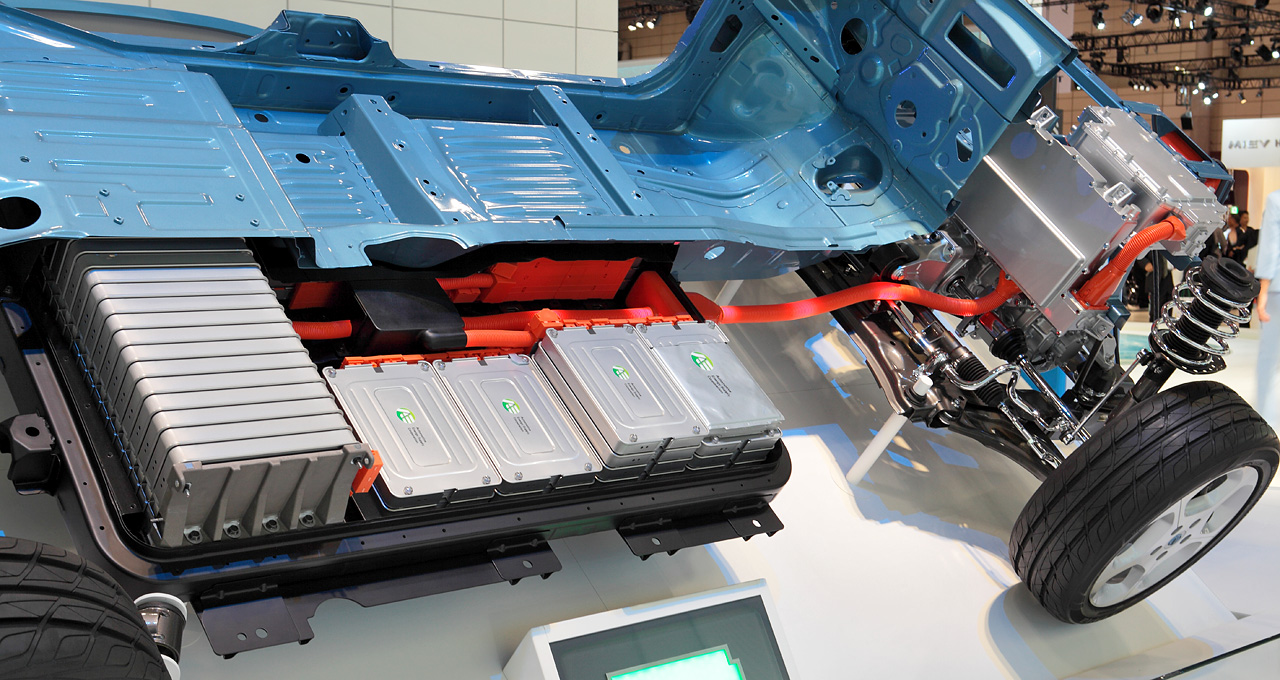

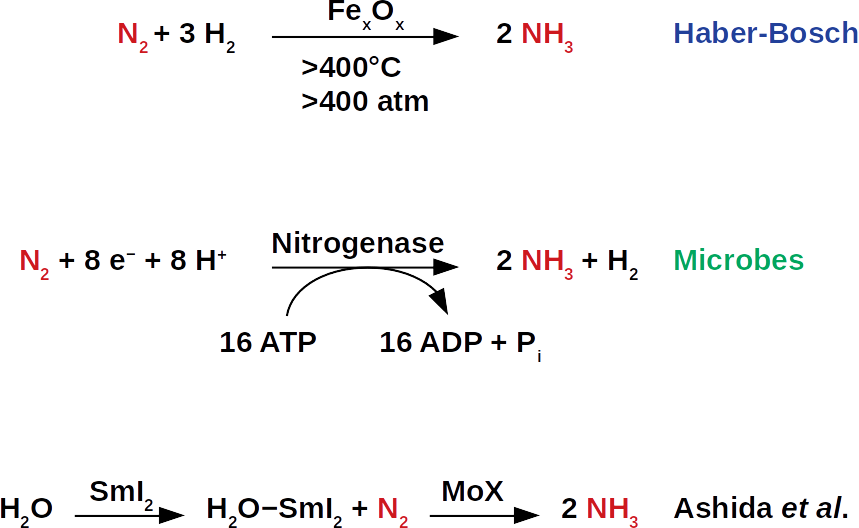

The third essential direction is the power grid. Our current grid is not designed to operate with large additional wind resources, so the grid of the future will have to be fundamentally different from today's. A large increase in variable wind and solar power is expected. To maintain a functional, efficient and reliable network, these power generators must be predictable and controllable. Renewable electricity generators must also be able to provide not only electricity but also stabilizing grid services. The path to the future requires integrated systems research at the interfaces between atmospheric physics, wind turbine dynamics, plant control and network operation. This also includes new energy storage solutions such as power-to-gas.

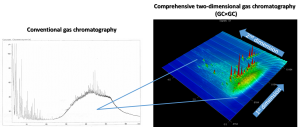

Wind turbines and their electricity storage can provide important network services such as frequency control, ramp control and voltage regulation. Innovative control could use the properties of wind turbines to optimize the energy production of the system and at the same time provide these essential services. For example, modern data processing technologies can handle large amounts of sensor data, which can then be applied to the entire system. This can improve energy capture, which in turn can significantly reduce operating costs. Meeting these demands requires extensive research at the interfaces of atmospheric flow modeling, individual turbine dynamics and wind turbine control with the operation of larger electrical systems.
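To make one of these services concrete, ramp control can be pictured as limiting how fast the power fed into the grid is allowed to change from one time step to the next. The following is a minimal sketch under stated assumptions, not a description of how any real plant controller works: the function name, the time series and the ramp limit are hypothetical, and the storage is idealized as having unlimited power and capacity.

```python
def ramp_limited_output(available_mw, max_ramp_mw):
    """Limit step-to-step changes in plant output.

    available_mw : power the wind could deliver at each time step, in MW
    max_ramp_mw  : largest allowed change between consecutive steps, in MW

    Returns (setpoints, storage_mw). A positive storage value means the
    storage must discharge to cover a shortfall; a negative value means
    surplus power is stored (or curtailed if no storage is available).
    """
    setpoints = []
    storage_mw = []
    previous = available_mw[0]  # assume the plant starts at available power
    for available in available_mw:
        lowest = previous - max_ramp_mw
        highest = previous + max_ramp_mw
        # Clamp the available power into the allowed band around the last setpoint.
        setpoint = min(max(available, lowest), highest)
        setpoints.append(setpoint)
        storage_mw.append(setpoint - available)
        previous = setpoint
    return setpoints, storage_mw


# Hypothetical gust followed by a sudden lull, with a 5 MW per step limit.
setpoints, storage = ramp_limited_output([10, 20, 20, 5], max_ramp_mw=5)
print(setpoints)
print(storage)
```

The sketch shows why storage and good inflow forecasts matter: smoothing a sudden drop in wind is only possible if stored energy is available, or if the controller knew about the drop early enough to reduce output gradually.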

Advances in science are essential to drive innovation, cut costs and achieve smooth integration into the power grid. Environmental factors must also be taken into account when expanding wind energy. To be successful, this expansion must be done responsibly so that damage to the landscape is minimized. Investments in science and interdisciplinary research in these areas will help to find solutions that are acceptable to everyone involved.

Such projects include studies that characterize and explain the effects of wind energy on wildlife. Scientific research that enables innovations and the development of inexpensive technologies for investigating the effects of wind turbines on wildlife, both on land and offshore, is currently being intensively pursued. To do this, we must understand how wind plants can be sited in such a way that the local effects are minimized and, at the same time, the affected communities benefit economically.

These major challenges in wind research complement each other. Characterizing the zone of the atmosphere in which wind turbines operate will be of crucial importance for the development of the next generation of even larger, more economical wind turbines. Understanding both the dynamics of the plants and the nature of the atmospheric inflow enables better control.

As an innovative company, Frontis Energy supports the transition to CO2-neutral energy generation.