A significant part of the scientific community is investigating the impact of greenhouse gas (GHG) emissions, in particular CO2, on our past, present, and future climate. To project the future climate of our planet, researchers feed CO2 emission scenarios into climate models. From these, they estimate how much CO2 industry and households can still emit while staying within the 1.5°C target. The central question therefore is: how much of the emitted CO2 actually remains in the atmosphere, and how much is taken up by living organisms or otherwise bound?

It seems the models have so far underestimated the capacity of biomass to incorporate CO2. An analysis of radiocarbon data from nuclear bomb testing in the 1960s suggests that terrestrial ecosystems can absorb more CO2 than previously thought, which amounts to a major upgrade for the models. Does that mean the Earth can cope with more emissions?

Researchers at the Worcester Polytechnic Institute in Massachusetts found that plants currently take up about 80 gigatons (billion tons) of carbon each year. They published their findings in a recent article in the renowned journal Science. That is 30% more than previously assumed.

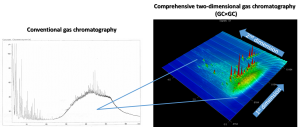

The initial idea was to take a closer look at the remnants of the nuclear tests in the 1950s and 1960s. Carbon dioxide molecules consist of carbon and oxygen. Among the radioactive substances that atomic bombs spread all over the world is radioactive carbon (14C). Like ordinary carbon (12C), the radioactive version can be part of a CO2 molecule. After the atomic bomb tests, 14C entered the terrestrial biosphere along with 12C through plant photosynthesis. By studying how 14C became enriched in plants, researchers can determine the rates of CO2 uptake and thus how much carbon plants capture.

Animals that feed on plants, as well as fungi and soil bacteria, take the same carbon into their organisms. When biomass decays in the soil, the carbon is released back into the atmosphere as CO2, and the cycle closes.

However, the question of how much CO2 from the air goes into the ground and is bound in biomass in the long term is anything but trivial. The analyses of radioactive carbon now show slightly less 14CO2 in the atmosphere shortly after the nuclear tests than expected. This implies that plants worldwide take up about 80 gigatons of carbon per year. Until now, climate researchers had assumed an uptake of 43 to 76 gigatons per year.
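How the new estimate compares with the earlier range depends on which end of that range one looks at. The short sketch below computes the relative difference for the low end, the high end, and the midpoint of the 43 to 76 gigaton range. Using the midpoint as a single reference value is our assumption about how a figure like "30% more" might arise; it is not necessarily the method used in the study, and the variable names are illustrative only.

```python
# Compare the new global plant uptake estimate with the earlier model range.
# All values are in gigatons of carbon per year (Gt C/yr).
NEW_ESTIMATE = 80.0
OLD_LOW, OLD_HIGH = 43.0, 76.0

def percent_above(new: float, old: float) -> float:
    """Return by how many percent `new` exceeds `old`."""
    return (new - old) / old * 100.0

# Assumption (for illustration only): the midpoint of the old range
# serves as a single "previously assumed" value.
old_mid = (OLD_LOW + OLD_HIGH) / 2.0

for label, old in [("low end", OLD_LOW), ("midpoint", old_mid), ("high end", OLD_HIGH)]:
    diff = percent_above(NEW_ESTIMATE, old)
    print(f"{label:>8}: {old:5.1f} Gt C/yr -> new estimate is {diff:5.1f}% higher")
```

Depending on the reference value, the new estimate lies roughly 5%, 34%, or 86% above the earlier assumptions.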

The previous assumptions were off mainly because the calculations relied largely on tree trunks. Carbon stored in wood remains bound for many decades or centuries. The new study also looked at non-woody biomass such as leaves, which take up a large proportion of carbon but hold it for a much shorter time. In addition, the extensive underground biomass of plants has so far received too little attention. As a rule, underground biomass is comparable in size to what is found above ground. In particular, only woody roots were taken into account, not the fine roots, which have even more mass and accordingly bind more carbon.

Eighty gigatons of carbon is not only significantly more than the figure used in common climate change forecasts. Converted to CO2, it amounts to roughly 290 gigatons, about eight times the annual man-made CO2 emissions (37.2 billion tons).
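The 80 gigatons refer to carbon, whereas the 37.2 billion tons refer to CO2, so both numbers have to be expressed in the same unit before they can be compared. A minimal sketch of that conversion, assuming the standard molar masses of carbon (about 12.011 g/mol) and oxygen (about 15.999 g/mol); the function and variable names are illustrative, not taken from the study:

```python
# Molar masses in g/mol (standard atomic weights, rounded).
M_C = 12.011
M_CO2 = 12.011 + 2 * 15.999  # one carbon atom plus two oxygen atoms, ~44.009

def carbon_to_co2(gt_carbon: float) -> float:
    """Convert a mass of carbon (Gt C) into the mass of CO2 containing it (Gt CO2)."""
    return gt_carbon * M_CO2 / M_C

def co2_to_carbon(gt_co2: float) -> float:
    """Convert a mass of CO2 (Gt CO2) into the mass of carbon it contains (Gt C)."""
    return gt_co2 * M_C / M_CO2

plant_uptake_c = 80.0       # Gt carbon per year taken up by plants (study estimate)
human_emissions_co2 = 37.2  # Gt CO2 per year of man-made emissions

plant_uptake_co2 = carbon_to_co2(plant_uptake_c)
ratio = plant_uptake_co2 / human_emissions_co2
print(f"{plant_uptake_c} Gt C = {plant_uptake_co2:.0f} Gt CO2, "
      f"about {ratio:.1f} times annual man-made emissions")
```

The conversion also works in reverse: 37.2 Gt of CO2 contain only about 10 Gt of carbon.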

Unfortunately, this does not mean that there is nothing to worry about. Living organisms also use biomass as fuel. Almost the same amount of CO2 is released again, for example when plants shed their leaves in autumn and these then serve as food for soil organisms. These natural CO2 emissions from the biosphere have increased since the 1960s.

Overall, photosynthesis removes only 30% of man-made CO2 emissions from the atmosphere and therefore cannot make up for fossil fuel consumption. The larger the natural areas available for photosynthesis, the more CO2 can be extracted from the air. Consequently, more forests and meadows, not fewer, need to be restored to their natural state.
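The contrast between the large gross uptake and the modest net effect can be illustrated with the article's own figures. This is a rough sketch under simplifying assumptions: the 30% share is applied to the 37.2 billion tons of annual CO2 emissions, and everything plants take up beyond that net sink is treated as released again by respiration and decay, ignoring other fluxes such as fires or land-use change. The variable names are illustrative only.

```python
# Rough comparison of gross plant uptake and the net land sink (illustrative only).
M_C, M_CO2 = 12.011, 44.009  # molar masses of carbon and CO2 in g/mol

gross_uptake_c = 80.0  # Gt C per year taken up by plants (study estimate)
emissions_co2 = 37.2   # Gt CO2 per year from human activity
net_share = 0.30       # assumed fraction of man-made CO2 removed by photosynthesis on land

emissions_c = emissions_co2 * M_C / M_CO2  # express emissions as carbon
net_sink_c = net_share * emissions_c       # carbon that stays bound on land
# Simplification: everything else that plants take up is released again.
returned_c = gross_uptake_c - net_sink_c

print(f"net land sink:  {net_sink_c:.1f} Gt C/yr")
print(f"released again: {returned_c:.1f} Gt C/yr "
      f"({returned_c / gross_uptake_c:.0%} of gross uptake)")
```

Under these assumptions, only a few percent of the carbon that plants take up each year stays on land, which is why the large gross uptake cannot offset fossil fuel consumption.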

Some climate models are based on incorrect assumptions. And yet, the more important carbon store is not on land but in the oceans. CO2 dissolves readily in seawater, and countless micro-algae in the oceans also carry out photosynthesis. Many marine organisms also use carbon, in the form of calcium carbonate, to build their shells. In total, the oceans store 16 times more carbon than the terrestrial biosphere.

The key findings include:

- Terrestrial vegetation and soils might absorb up to 30% more CO2 than earlier models estimated.

- Carbon storage in these ecosystems is more short-lived than previously believed, implying that man-made CO2 may not remain in the terrestrial biosphere as long as current models suggest.

- The discrepancy arises because the models underestimated the carbon stored in short-lived, non-woody plant tissues and in the extensive underground parts of plants, such as fine roots.

These findings have significant implications for climate predictions and for crafting effective climate policies. They highlight the need for a more accurate representation of the global carbon cycle in climate models. While the increased uptake of CO2 by vegetation is a positive sign, it does not lessen the urgency of reducing carbon emissions to combat climate change.